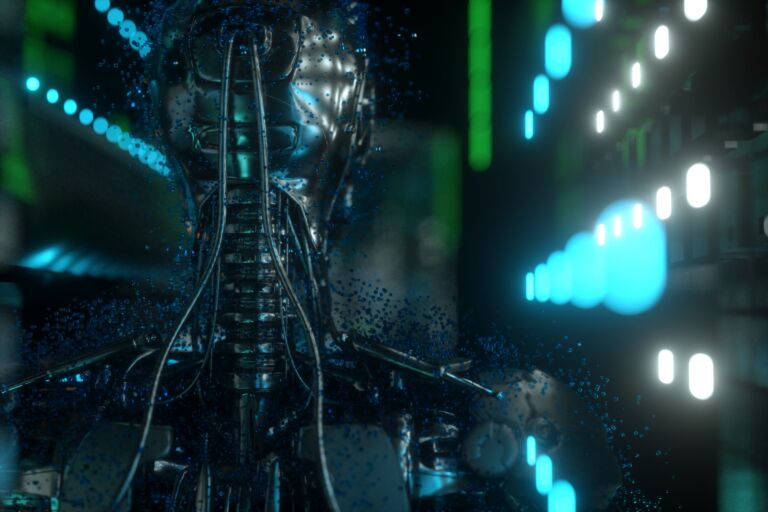

Artificial Intelligence: What are the 5 major cyber threats for 2024?

The recent deepfake scam targeting an employee of a Chinese company, which cost the multinational corporation 26 million euros, illustrates the dangers of malicious AI use. Following an unprecedented democratization of the technology, AI applications are booming in fields such as healthcare, industry, energy, and finance. This revolution raises numerous concerns and questions about its potential dangers, especially in cybersecurity. Some cyber threats are still emerging, while others are already very real.

Advancement of deepfakes for large-scale attacks

Described by many researchers as one of the most dangerous uses of AI, deepfakes saw unprecedented growth in 2023. According to a study by Onfido, deepfake fraud attempts increased by 3,000% over the past year, and this trend is expected to continue, particularly in financial scams. Although convincing deepfakes are still rare, they are becoming simpler and cheaper to create, which makes large-scale attacks an imminent threat.

Deep scams: Between automation and proliferation of scams

Deep scams, which do not necessarily involve audiovisual manipulation, are scams whose reach is multiplied by automation so that they can target a vast number of victims. For every manual scam there is an automated version, applicable to malicious operations such as phishing, romance scams, and real estate fraud. Instead of targeting a few victims at a time, these scams can target thousands.

Malware using Large Language Models (LLMs)

Researchers have identified three worms that use LLMs to rewrite their own code each time they replicate. By exploiting OpenAI's API, such worms can use GPT to generate different code for each infected target, which makes detection particularly difficult. OpenAI maintains a blacklist of malicious behaviors, but when an LLM is downloaded and run directly on a personal server, no such blacklist can be enforced. In this respect, closed-source generative AI systems are safer.

Democratization of 0-day exploits

A 0-day exploit is the malicious exploitation of a software vulnerability before developers have identified or fixed it. An AI assistant can help developers find flaws in their code so they can be corrected, but it can just as easily be used to find flaws in order to build exploits. Although this misuse is currently uncommon, it is likely to appear soon.

Fully automated malware as the ultimate threat

Hackers may soon turn to fully automated campaigns, with potentially disastrous consequences. The advent of 100% automated malware is considered the most significant security threat for 2024.

While the rise of AI offers unique opportunities for many sectors, the emergence of such a powerful and revolutionary technology continues to attract the interest of cybercriminals. In the long term, the development of Artificial General Intelligence (AGI) could become the primary threat to humanity, as it could lead to the creation of superintelligent machines that pose a genuine danger. While optimism about the positive impact of AI is warranted, it is essential to consider the possibility that humanity may one day be surpassed by a more intelligent species.