Child privacy and protection: finding the right balance in the fight against dangerous sexual content

To counter a variety of threats, technology companies and messaging platforms use encryption to keep conversations private. However, encryption can hinder efforts to protect children, for example against violent and sexual content and child abuse.

In 2022, the European Commission unveiled a new digital strategy to protect children online that included three new measures. The first aimed to protect children from online harassment, illegal sexual content and child predators by establishing a standard for online age verification.

This decision remains the subject of bitter debate between supporters of strict privacy and those favoring a more flexible approach to protecting the most vulnerable, while the European “Child Sexual Abuse Regulation” (CSAR) is still under discussion. At the 2024 InCyber Forum, a round table brought together several technology and child protection experts to identify areas of convergence that could protect children without infringing on their right to privacy.

A surge in sex-related threats

A recent estimate suggests that some 88 million photos and videos of sexually abused children were circulating online in 2022. Socheata Sim, head of the social mission in France for Cameleon, an NGO that has been fighting child abuse in France and the Philippines for 26 years, paints a worrying picture, identifying four trends.

First, there is “grooming”, in which children are contacted and drawn into games with sexual connotations. The predator poses anonymously as another child to get them to share private information and to push them into committing illegal acts.

Another common practice is the corruption of minors. The abuser sends a young person a link along with a warning not to click on it. Out of curiosity, the young person often clicks anyway and is then shown pornographic images and videos. This has harmful effects, as Hervé Le Jouan, an entrepreneur specializing in technological security, points out. “On average, 66% of 11–13-year-olds have been exposed to this type of content at least once. A third can become addicted, lose control of their emotions and isolate themselves. This addiction can continue into adulthood.”

A third danger also lurks: “sextortion”. Teenagers often exchange nude photos of themselves when flirting. This becomes a problem if a sexual predator gets hold of such a photo and threatens to make it public unless the teenager complies with the predator’s demands.

Finally, there are “live streaming” sessions (particularly on instant messaging platforms) in which children and teenagers can see sex acts, torture scenes or particularly violent suicide videos. According to WeProtect Global Alliance, an NGO that campaigns against child sexual abuse, two-thirds of 18-year-olds in France have seen such images at some point during their childhood.

More flexible rules for better protection?

In the face of these challenges, Tijana Popovic, a public affairs advisor in Belgium for the NGO Child Focus, is worried about resistance, in the name of privacy, to efforts to protect children. She does not argue against the privacy regulations in question, but for introducing some flexibility into them, given the need to protect young people against illegal sexual content.

She cites the case of a 10-year-old Belgian girl, referred to as Lola to protect her anonymity. Seventeen years later, an investigation found that content depicting her was still being viewed and shared by nearly 12,900 predators, who often use anonymization tools to mask their digital footprints.

In her view, privacy is not a monolithic right. On the contrary, it encompasses several rights, such as the digital right to be forgotten and the right to correct personal data. While this framework may work for adults, it is more complicated for children, who are not necessarily aware of all these aspects. For her, refusing to examine children’s conversations could mean missing the all-too-real dangers posed by child abusers.

The idea, without calling young people’s privacy into question or curtailing it, is to have the technical capability to inspect content and detect illegal acts, so that platforms can intervene as early as possible, remove the offending content and report it to the relevant authorities. Many platforms continue to resist these efforts, arguing that such an approach could drive users away and that no truly viable technical solution exists today.

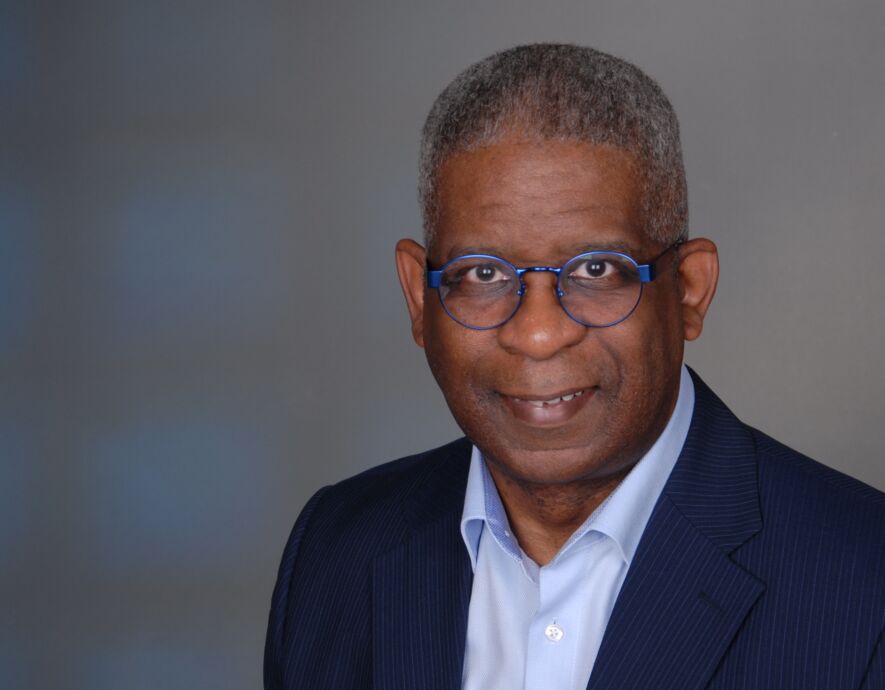

The debate is far from settled. As cybersecurity strategist Romeo Ayalin suggested in conclusion, a combination of technology and human review could prove more effective.