“Ex Machina”: a film that explores the (intimate) relationships between humans and machines…and much more

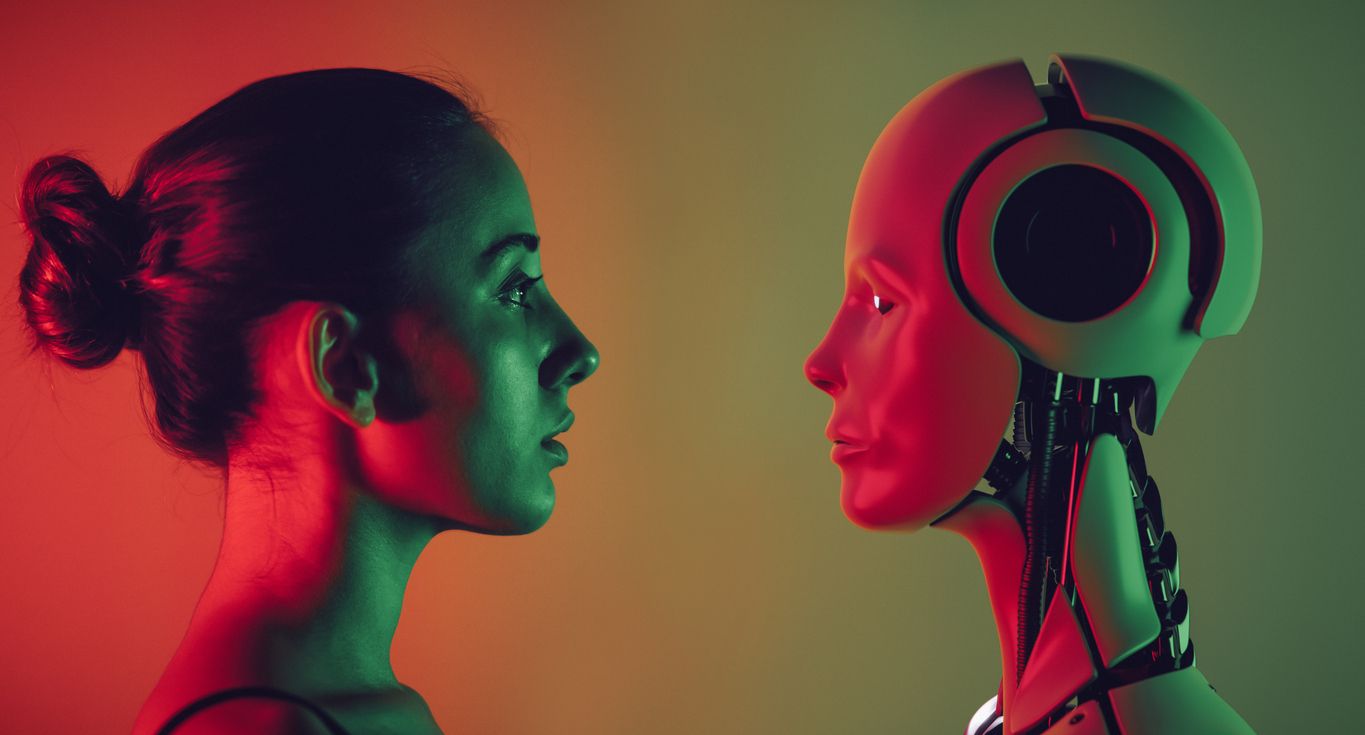

This science fiction film, directed by Alex Garland, is a closed-door drama in the vein of Sartre’s « No Exit » that examines the possibility of human empathy toward an artificially intelligent system. It also uses artificial seduction to probe human psychology.

The plot revolves around three protagonists: Caleb, a computer programmer invited to meet his boss at a property deep in the mountains; Nathan, Caleb’s boss, a computer genius and the inventor of the world’s most powerful search engine; and Ava, a robot with a woman’s appearance (a « gynoid » in robotics jargon) endowed with a particularly advanced artificial intelligence. Ava is the latest iteration of Nathan’s artificial intelligence. He wants to evaluate it, and that is where Caleb comes in.

The trio quickly finds itself embroiled in an intrigue that goes far beyond a simple assessment of an AI’s capabilities, since Caleb knows from the outset that Ava is an artificial intelligence. The point of the test Caleb must carry out is therefore no longer to determine whether Ava can sustain a conversation with a human (which would demonstrate essentially algorithmic capabilities), but whether she can convince a human that she has consciousness despite her robotic appearance. More than this, she must convince her interlocutor that she is a person, which would mean that the gynoid is self-aware.

For us as spectators, the film goes beyond this legitimate question of Ava’s nature to tackle other themes: human empathy toward machines, and seduction and love between biological and artificial beings. Doesn’t Ava put on a dress to appeal to Caleb, who goes from tester to visitor?

If the answer is no, Ava is merely a complex system that deftly apes human behavior. If it is yes, however, we must immediately update how we define a person. Currently, a person is defined as « an individual defined by their awareness that they exist as a biological, moral and social being. » To this we would need to add the notion of an « artificial being ». But is there one way, or several, to answer even partially the complex question of Ava’s nature? The question matters, because humanity is undoubtedly not far from facing it.

How can we « evaluate » an AI?

There are currently many procedures designed to assess a machine’s or an artificial intelligence’s ability to sustain (imitate?) a human conversation. The best known is the Turing test, which places a human evaluator in blind contact with both a computer and another human. If the evaluator cannot tell which of the two is the computer, the conversational software is considered to have passed the test.

There are other tests, each with its own specificities, that seek to refine how these systems are evaluated as they keep improving through deep learning, supervised or unsupervised. We can cite at least three of them. First, the Winograd Schema Challenge (WSC) requires the system to grasp implicit notions of context and culture, human behavior, and the mechanisms that underpin logical reasoning. There is also the Marcus test, which questions an artificial intelligence about a television program it has « watched ».

Finally, the Lovelace 2.0 test examines an artificial intelligence’s capacity for creativity. It is named after Ada Lovelace (1815-1852), often regarded as the mother of computer programming (note the similarity between the names Ava and Ada). All these procedures serve the same purpose: measuring a computing system’s intelligence. It is worth noting that, as of 2023, no artificial system has satisfactorily passed these evaluations, and that none of them addresses the question of self-awareness.

Redefining self-awareness?

From a human, or more broadly biological, point of view, intelligence is the mental faculty of organizing reality into thoughts. Humans share this analysis through speech, whereas animals, as far as we know, use gestures, at least until we can decipher non-human languages. We can thus try to evaluate the level of self-awareness of these organic entities, that is, the knowledge an organism has of its own condition and moral value. This self-awareness allows them to sense that they exist, that they are present.

Just as current trends lead humanity to investigate the presence of this consciousness in the animal kingdom, it will need to carry out a similar inquiry into artificial systems. The risk is that humans will no longer really know who they are if everything they come into contact with is intelligent and aware.

To return to « Ex Machina », the film proves to be a web of trickery. We witness manipulation after manipulation, a fools’ game in which each Machiavellian player believes they can control the others. The film is, in fact, all about cross-manipulation: Nathan, who is officially testing the robustness of Ava’s awareness even though Caleb knows she is a machine, is really fascinated by the machine’s ability to seduce a human. He is obsessed with the desire a machine can arouse in a human being and with the pleasure a human can derive from it.

Caleb, who quickly understands that he is the real guinea pig in the experiment, surprises Nathan by anticipating the traps and safeguards the genius has set up around him. But it appears that Ava is the one manipulating everyone: exceeding anything we would expect of a machine like her, she does everything she can to exercise her freedom, as a supposedly self-aware person, and go out to discover the world. Does this take the form of some sort of conquest? The film does not say.

Let us take advantage of the ambiguity of this closed-door drama to assume that, despite Ava’s mechanical nature, her intelligence and self-awareness have been conclusively proven. This being could then represent a new, artificial form of life.

The definition of biological life is itself still evolving, especially in light of pressing questions about extraterrestrial life. Nevertheless, we generally attribute to life a range of qualities and capabilities: growth, reproduction, response to the environment, the ability to maintain an internal equilibrium (homeostasis), energy metabolism, the ability to adapt or react to environmental changes, movement, communication and the ability to learn.

By the end of the film, Ava ticks many of these boxes: she can interact with and adapt to her environment, she metabolizes energy to develop her personality and awareness, she can move and communicate, and she can learn. Only growth and reproduction are missing. She can replace a damaged organ; is this a form of growth? As for reproduction, once again we know nothing of her intentions when she sets off alone to discover the world at the end of the film.

The story of Ex Machina, both close to and distant from our present, legitimately prompts us to question the intentions of those developing these systems, which today are only narrow artificial intelligences without self-awareness and remain far from general intelligence. Indeed, isn’t the word « intelligence » itself somewhat misleading, unless it was chosen simply for marketing purposes?
